Imagine this: it’s late at night, and you’re troubleshooting a critical issue on a production Linux server. Under pressure, you type out a command to clean up some unnecessary files, something you’ve done countless times before. But in your haste, your fingers betray you. Instead of targeting a specific directory, you accidentally execute:

rm -rf /

For a moment, there’s silence. Then panic sets in as your terminal floods with messages about files disappearing. Before you can stop it, critical system files are gone, the server is unresponsive, and your work comes to a screeching halt.

I wish I could say this is just a nightmare scenario, but it happened to me. That single accidental command wiped out an important server, leading to hours of downtime, frantic recovery efforts, and a hard lesson in Linux administration.

In this article, I’ll share how I turned this painful experience into a wake-up call to implement safeguards that prevent such disasters. Whether you’re a seasoned sysadmin or a Linux enthusiast, these tips will help you protect your systems from the destructive power of rm -rf /.

Here are several approaches to mitigate this:

1. Add the --preserve-root option

The --preserve-root flag is a safeguard built into GNU rm (the version on most Linux distributions) that prevents deletion of the root directory (/). It is enabled by default in current versions, so setting it explicitly is an extra precaution rather than a requirement.

When --preserve-root is enabled, any attempt to execute rm -rf / is blocked, and you receive the following warning:

rm: it is dangerous to operate recursively on ‘/’

rm: use --no-preserve-root to override this failsafe

Ensure rm uses this flag by default:

alias rm='rm --preserve-root'

Add this alias to your shell configuration file (e.g., ~/.bashrc or /etc/bash.bashrc) and reload it:

echo "alias rm='rm --preserve-root'" >> ~/.bashrc

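source ~/.bashrc

This prevents rm -rf / from running unless explicitly overridden with --no-preserve-root. Keep in mind that bash expands aliases only in interactive shells, so scripts use rm's default behavior.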

💡NOTE: This works only for the root directory. It won’t protect other directories from accidental deletions.

2. Use aliases

Replacing the default rm command with an interactive version adds a layer of protection by prompting users for confirmation before deleting files.

Alias rm with the -i (interactive) option:

alias rm='rm -i'

This forces rm to ask for confirmation before deleting each file, reducing accidental deletions.

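💡NOTE: This can slow down intentional deletions when dealing with a large number of files. To delete such files, users can bypass the alias by calling /bin/rm directly. Also be aware that in GNU rm a later -f cancels an earlier -i, so with this alias rm -rf still deletes without asking; the alias mainly guards plain rm. The -I option (prompt once before removing more than three files or when removing recursively) is less intrusive, but -f cancels it the same way.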
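To see why the alias does not stop rm -rf, the following sketch writes out what the shell actually runs once the alias is expanded. It assumes GNU coreutils rm; the temporary directory and file name are purely illustrative.

```shell
#!/usr/bin/env bash
# Sketch: with alias rm='rm -i', typing "rm -f FILE" runs "rm -i -f FILE".
# Aliases are not expanded in scripts, so the expanded form is written out.
set -euo pipefail

demo_dir="$(mktemp -d)"
touch "$demo_dir/victim.txt"

# stdin comes from /dev/null: if rm prompted, it would read EOF, treat it
# as "no", and keep the file.
rm -i -f "$demo_dir/victim.txt" < /dev/null

if [[ -e "$demo_dir/victim.txt" ]]; then
  result="kept"      # -i won: rm prompted and the answer was "no"
else
  result="deleted"   # -f won: no prompt, file removed
fi
echo "victim.txt was $result"

rmdir "$demo_dir"
```

With GNU rm the script reports that the file was deleted, which is exactly what happens to rm -rf under the -i alias.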

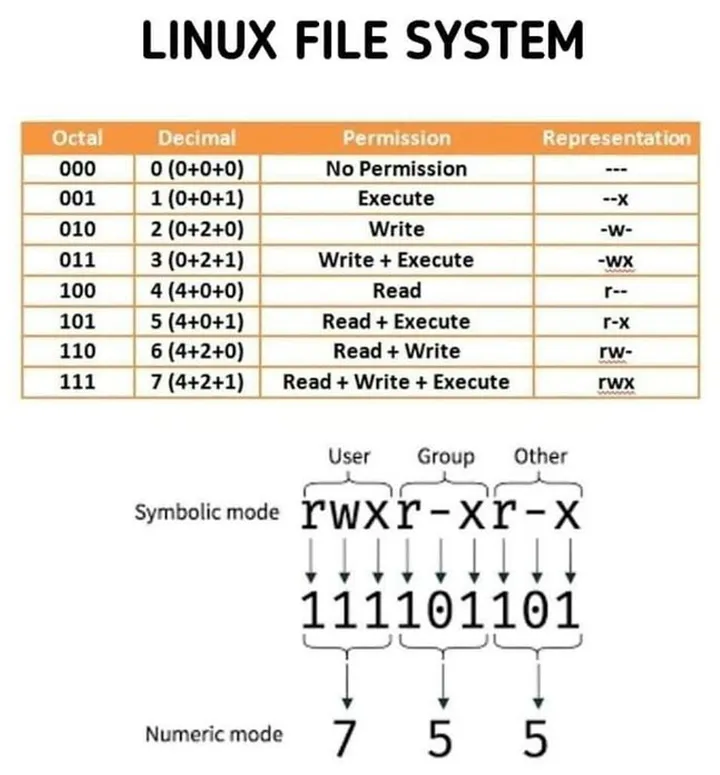

3. Make critical files and directories immutable

Once a file or directory is marked immutable, not even root can modify or delete it until the immutable attribute is explicitly removed. The attribute is supported on common Linux filesystems such as ext4.

Use chattr (Change Attributes) to make directories/files immutable:

chattr +i /important/file/or/directory

To verify the immutable attribute (the -d flag makes lsattr report on a directory itself instead of listing its contents):

lsattr -d /important/file/or/directory

The output will include an i flag indicating immutability.

To remove the immutable attribute:

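chattr -i /important/file/or/directory

When attempting to delete an immutable file, even with rm -rf, you’ll encounter an error like:

rm: cannot remove '/important/file/or/directory': Operation not permitted

💡NOTE: The attribute must be applied manually to each file or directory, and it protects only the entries it is set on. On a directory, +i stops entries from being added to or removed from that directory, but files inside can still be modified and non-immutable subdirectories can still be emptied; use chattr -R +i to apply it recursively. Keep track of which paths you have locked, because package updates and other legitimate administrative tasks will fail on them until you run chattr -i.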
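Because it is easy to lose track of which paths are locked (or were never locked), a small audit helper can list the critical paths that are not immutable. This is a sketch: the function names are hypothetical, it assumes e2fsprogs' lsattr and a filesystem with attribute support (such as ext4), and it relies on lsattr printing the flag string as the first field of each line.

```shell
#!/usr/bin/env bash
# Sketch: audit which critical paths carry the immutable attribute.

# Succeeds if an lsattr output line ("<flags> <path>") has the lowercase
# 'i' (immutable) flag. Uppercase 'I' means "indexed directory" and is ignored
# because [[ == ]] pattern matching is case-sensitive by default.
line_has_immutable_flag() {
  local flags="${1%% *}"   # keep only the first field (the flag string)
  [[ "$flags" == *i* ]]
}

# Prints every given path that is NOT immutable. Requires lsattr (e2fsprogs)
# and a filesystem with attribute support, such as ext4.
report_mutable_paths() {
  local path line
  for path in "$@"; do
    # -d reports on a directory itself rather than listing its contents.
    if ! line="$(lsattr -d "$path" 2>/dev/null)"; then
      echo "cannot read attributes: $path" >&2
      continue
    fi
    if ! line_has_immutable_flag "$line"; then
      echo "not immutable: $path"
    fi
  done
}
```

For example, report_mutable_paths /etc/fstab /etc/passwd prints whichever of those paths still lack the flag.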

4. Implement Command Restrictions

Creating a custom wrapper function for rm in your shell configuration file can block destructive commands or introduce additional checks.

1. Define a custom `rm` function in your shell configuration file:

rm() { local arg; for arg in "$@"; do if [[ "$arg" == "--no-preserve-root" || "$arg" == "/" ]]; then echo "Command not allowed." >&2; return 1; fi; done; /bin/rm "$@"; }

2. Add the function to your configuration file:

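echo 'rm() { local arg; for arg in "$@"; do if [[ "$arg" == "--no-preserve-root" || "$arg" == "/" ]]; then echo "Command not allowed." >&2; return 1; fi; done; /bin/rm "$@"; }' >> ~/.bashrc

This function intercepts calls to rm and checks each argument. If it finds / as a target or the --no-preserve-root override, it prints a warning and refuses to run; otherwise it passes the arguments unchanged to /bin/rm. If ~/.bashrc already defines an rm alias from the previous sections, delete that alias line first: bash would expand the alias inside this function definition and reject it with a syntax error.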

💡NOTE: Executing the command /bin/rm directly will bypass this function.
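A variation on the wrapper above also catches rm -rf /*, which --preserve-root does not stop because the shell expands the glob into /bin, /etc, /usr, and so on before rm ever runs. The sketch below assumes bash and GNU realpath (for the -m and -s options); it refuses --no-preserve-root and any target that is / or a direct child of /. The helper name rm_args_are_dangerous is hypothetical, and the check deliberately blocks legitimate deletions of top-level paths too, so use /bin/rm for those.

```shell
#!/usr/bin/env bash
# Sketch of a stricter rm wrapper (hypothetical helper name).

# Returns 0 (dangerous) if any argument is --no-preserve-root, or names "/"
# or a direct child of "/" such as /etc (which is what "rm -rf /*" expands to).
rm_args_are_dangerous() {
  local arg path
  for arg in "$@"; do
    if [[ "$arg" == "--no-preserve-root" ]]; then
      return 0
    fi
    # Simplification: anything starting with "-" is treated as an option.
    if [[ "$arg" == -* ]]; then
      continue
    fi
    # Normalize to an absolute path without requiring it to exist (-m) and
    # without following symlinks (-s), since rm removes a symlink itself.
    path="$(realpath -m -s -- "$arg")"
    # "${path#/}" drops the leading slash; no remaining "/" means depth <= 1.
    # An empty result (e.g. realpath failed) also counts as dangerous.
    if [[ "${path#/}" != */* ]]; then
      return 0
    fi
  done
  return 1
}

rm() {
  if rm_args_are_dangerous "$@"; then
    echo "Command not allowed." >&2
    return 1
  fi
  command rm "$@"   # "command" skips this function and calls the real rm
}
```

To use it interactively, add both functions to ~/.bashrc in place of the one-line version. The check is only a heuristic: /bin/rm still bypasses it, and options that appear after -- are not analyzed.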

5. Limit Privileged Access

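Avoid working in a root shell, and use sudo to elevate privileges only for the specific commands that need them. As a regular user, an accidental recursive delete fails with permission errors on system directories, although it can still remove files your account owns.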

6. Monitor and Educate

Train users and administrators on the dangers of destructive commands. Use tools like auditd to monitor command usage.
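As a sketch of the auditd approach, the functions below add a rule that logs file-deletion system calls made by regular login users and then query the log. They assume auditd is installed and running, root privileges, and an x86_64 system; the key name file_delete and the function names are arbitrary choices. A rule added with auditctl lasts only until reboot unless it is also placed in /etc/audit/rules.d/.

```shell
#!/usr/bin/env bash
# Sketch: audit file deletions with auditd (run as root on x86_64).

# Log unlink/unlinkat/rmdir calls, plus rename/renameat (which can overwrite
# files), made by login users (auid >= 1000). 4294967295 is the "unset"
# login UID of daemons. The -F arguments are quoted so the shell does not
# treat ">" as a redirection.
add_delete_audit_rule() {
  auditctl -a always,exit -F arch=b64 \
    -S unlink -S unlinkat -S rmdir -S rename -S renameat \
    -F 'auid>=1000' -F 'auid!=4294967295' -k file_delete
}

# Show matching events with numeric fields (UIDs, syscalls) interpreted.
show_delete_events() {
  ausearch -k file_delete -i
}
```

Calling add_delete_audit_rule once and later show_delete_events reveals which user deleted what, and when.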

7. Backup Regularly

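Always have a reliable backup system in place (e.g., rsync, duplicity) so you can recover quickly from accidental deletions. Keep copies on a separate machine or storage medium, since a backup on the same server can be wiped along with everything else.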
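For the rsync option, one common pattern is dated snapshots in which unchanged files are hard-linked to the previous snapshot with --link-dest, so each snapshot looks complete but only changed files take new space. This sketch assumes rsync is installed and that the destination is on a filesystem supporting hard links; the function name and directory layout are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: hard-linked rsync snapshots (hypothetical layout:
#   DEST/<timestamp>/ for each run, DEST/latest -> newest snapshot).
set -euo pipefail

snapshot() {
  local src="$1" dest="$2"
  local stamp target
  stamp="$(date +%Y-%m-%dT%H%M%S)"
  target="$dest/$stamp"
  mkdir -p "$dest"

  local -a opts=(-a)
  # Reuse unchanged files from the previous snapshot as hard links.
  # An absolute path avoids rsync resolving it relative to the target.
  if [[ -d "$dest/latest" ]]; then
    opts+=(--link-dest="$(cd "$dest/latest" && pwd -P)")
  fi

  # Trailing slash on the source copies its contents, not the directory itself.
  rsync "${opts[@]}" "$src/" "$target/"
  ln -sfn "$stamp" "$dest/latest"   # relative symlink to the new snapshot
  echo "$target"
}
```

Running snapshot /etc /mnt/backup/etc from a daily cron job would, under these assumptions, build up a series of browsable, space-efficient copies.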

Example Test

To test the safeguards, run rm -rf / only on a disposable test machine or virtual machine with --preserve-root in effect. It should result in the following output (the chattr restrictions instead produce the "Operation not permitted" error described in section 3):

rm: it is dangerous to operate recursively on '/'

rm: use --no-preserve-root to override this failsafe
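If you would rather not run the command at all, a safer first check is whether your rm documents the option in its help text. This is a heuristic sketch that assumes GNU-style --help output; other rm implementations (for example BusyBox) may not list the option, in which case the check simply reports that support was not found.

```shell
#!/usr/bin/env bash
# Sketch: detect whether the rm on PATH documents --preserve-root.
rm_supports_preserve_root() {
  # "command" bypasses any rm function; "--" stops grep from treating the
  # pattern as one of its own options.
  command rm --help 2>&1 | grep -q -- '--preserve-root'
}

if rm_supports_preserve_root; then
  echo "rm supports --preserve-root"
else
  echo "rm does not document --preserve-root; do not rely on it"
fi
```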

Key Takeaway

Combining these options provides layered protection against accidental deletions, ensuring both safety and flexibility. For production environments, pairing these measures with regular backups creates a robust safeguard against disasters.

Final note: if you liked this article, don’t forget to like and share it. Also, follow me on Medium.com and Instagram Threads @techbyteswithsuyash.

Have a good day and talk to you in another article!!